Traditional Storage System

- Traditional storage systems are conventional, centralized storage solutions. They typically rely on a single storage server to manage and store data, and they use a client-server architecture in which one or more client nodes connect directly to that central server.

- Traditional storage systems are suitable for smaller-scale applications or organizations where the volume of data is relatively manageable.

- Example: When you type “Big Data” into the Wikipedia search bar, the client sends a request to the Wikipedia server, which returns the relevant articles for the client to display.

- Disadvantages:

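- Searching the data is slow, because a single server has to scan everything.

- If the central server fails, the whole database becomes unavailable and may be lost, because the server is a single point of failure.

- When many users access the data at the same time, the single server becomes a bottleneck.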

Distributed Storage System

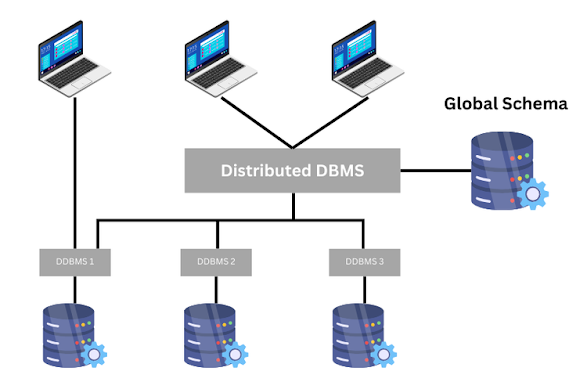

- Distributed storage systems involve the use of multiple storage devices or servers that are interconnected. Data is distributed across these devices, providing improved scalability, fault tolerance, and performance.

- Distributed storage systems are designed to handle larger volumes of data and are often more resilient to failures. They can be scaled horizontally by adding more nodes to the system.
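To make horizontal scaling concrete, here is a minimal sketch that spreads records over a set of nodes with a stable hash. The node names and the modulo placement rule are assumptions chosen for illustration. This is not how HDFS places blocks (there the NameNode decides). It only shows that adding nodes reduces the share of data each node holds.

```python
import hashlib
from collections import Counter


def pick_node(key, nodes):
    """Map a key to one node using a stable hash (hypothetical placement rule)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


def load_per_node(keys, nodes):
    """Count how many keys land on each node."""
    return Counter(pick_node(key, nodes) for key in keys)


if __name__ == "__main__":
    keys = [f"record-{i}" for i in range(10_000)]
    small_cluster = ["node-1", "node-2", "node-3"]
    large_cluster = small_cluster + ["node-4", "node-5"]
    print("3 nodes:", dict(load_per_node(keys, small_cluster)))
    print("5 nodes:", dict(load_per_node(keys, large_cluster)))
```

With simple modulo placement, changing the node count also moves most existing keys, which is one reason real systems tend to use more careful placement schemes.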

Challenges with Big Data

- Storing huge amounts of data: An enormous amount of data is generated each day, and much of it is unstructured, which traditional relational databases are not designed to store.

- Processing and analyzing massive data: Extracting insights from data at this scale takes a long time on a single machine.

- Securing data

Hadoop (Solution)

- Hadoop is a distributed storage and processing framework designed for handling and analyzing large volumes of data. The Hadoop ecosystem includes the Hadoop Distributed File System (HDFS) for storage and the MapReduce programming model for distributed processing.

- Hadoop provides a scalable, fault-tolerant storage solution (HDFS) and a distributed processing model (MapReduce) that allows for parallel processing of data across a cluster of machines.

- Hadoop consists of three components that are specifically designed to work on Big Data:

- Storage unit (HDFS)

- Processing unit (MapReduce)

- Resource management unit (YARN, Yet Another Resource Negotiator)
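The MapReduce programming model can be illustrated with a word count that runs in a single Python process. This is only a sketch of the map, shuffle, and reduce phases. The function names are hypothetical and it does not use the Hadoop API, where these phases would run in parallel across the cluster.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values of one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence on an iterable of text lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "Big cluster"]))
```

Because each line is mapped independently and each key is reduced independently, both phases can be split across many machines, which is the property the cluster exploits.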

Hadoop Distributed File System (HDFS):

Hadoop Distributed File System (HDFS) is a distributed file system designed to store and manage very large files reliably and efficiently. It is a fundamental component of the Apache Hadoop framework, which is widely used for distributed storage of big data.

Master-Slave Architecture:

- HDFS follows a master-slave architecture with two main components: the NameNode (master node) and the DataNodes (slave nodes). The NameNode manages metadata and keeps track of the location of file blocks, while the DataNodes store the actual data blocks.

- The NameNode is responsible for managing the metadata of the HDFS. This includes information about the directory and file structure, permissions, and the location of data blocks.

- The Secondary NameNode keeps copies of the edit log and the fsimage. It periodically merges the two to produce an updated fsimage (a checkpoint).
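The merge of fsimage and edit log can be sketched as replaying a list of logged operations on top of a snapshot. The dictionary layout and the operation names (`create`, `add_block`, `delete`) are assumptions made for this example and do not reflect the actual on-disk formats.

```python
import copy


def apply_edit(namespace, edit):
    """Apply one logged operation to an in-memory namespace (hypothetical ops)."""
    op, path = edit[0], edit[1]
    if op == "create":
        namespace[path] = {"blocks": []}
    elif op == "add_block":
        namespace[path]["blocks"].append(edit[2])
    elif op == "delete":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown operation: {op}")


def checkpoint(fsimage, edit_log):
    """Replay the edit log on a copy of the fsimage.

    Returns the updated fsimage and a fresh, empty edit log.
    """
    new_image = copy.deepcopy(fsimage)
    for edit in edit_log:
        apply_edit(new_image, edit)
    return new_image, []


if __name__ == "__main__":
    image = {"/a.txt": {"blocks": ["blk_1"]}}
    log = [("create", "/b.txt"), ("add_block", "/b.txt", "blk_2"), ("delete", "/a.txt")]
    print(checkpoint(image, log))
```

Keeping the snapshot and the log separate means each change only appends a small record, while the expensive full rewrite happens during the periodic merge.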

HDFS Data Blocks:

- HDFS divides large files into smaller blocks (typically 128 MB in size) and distributes these blocks across multiple nodes in a Hadoop cluster.

- HDFS provides fault tolerance by replicating data across multiple nodes in the cluster. Each data block is typically replicated three times, and if a node fails, the system can retrieve the data from other nodes where it is stored.
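The block and replication figures above lend themselves to a short calculation. The sketch below assumes the typical values from the text (128 MB blocks, three replicas) and uses hypothetical helper names. It shows how a file splits into blocks and how much raw storage the replicas consume.

```python
BLOCK_SIZE_MB = 128  # typical block size mentioned in the text
REPLICATION = 3      # typical replication factor mentioned in the text


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the size of each block; the last block may be smaller."""
    if file_size_mb <= 0:
        return []
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)
    return sizes


def raw_storage_mb(file_size_mb, replication=REPLICATION):
    """Total cluster storage used once every block is replicated."""
    return file_size_mb * replication


if __name__ == "__main__":
    print(split_into_blocks(500))  # three full blocks plus one partial block
    print(raw_storage_mb(500))     # storage across all replicas
```

A 500 MB file therefore occupies four blocks, and the final block only takes up the 116 MB it actually needs rather than a full 128 MB.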

HDFS Rack Awareness:

A rack is a collection of roughly 30-40 DataNodes, usually connected to the same network switch. Rack Awareness improves fault tolerance by ensuring that the replicas of a data block are not all stored on the same rack. This strategy minimizes the risk of data loss if an entire rack or its network switch fails.
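A rack-aware placement rule can be sketched as follows. The example assumes the commonly described default for three replicas: the first on the writer's node, the second on a node in a different rack, and the third on another node in that same remote rack. The topology dictionary and function name are hypothetical, and real placement also weighs factors such as free space and load.

```python
import random


def place_replicas(topology, writer_node, rng=random):
    """Pick three DataNodes for one block, spread over two racks.

    topology maps a rack name to the list of DataNode names in that rack.
    """
    rack_of = {node: rack for rack, nodes in topology.items() for node in nodes}
    local_rack = rack_of[writer_node]
    # Candidate remote racks need two nodes to hold the second and third replica.
    remote_racks = [
        rack for rack, nodes in topology.items()
        if rack != local_rack and len(nodes) >= 2
    ]
    if not remote_racks:
        raise ValueError("need a second rack with at least two DataNodes")
    remote_rack = rng.choice(remote_racks)
    second, third = rng.sample(topology[remote_rack], 2)
    return [writer_node, second, third]


if __name__ == "__main__":
    cluster = {
        "rack-1": ["dn1", "dn2"],
        "rack-2": ["dn3", "dn4"],
        "rack-3": ["dn5", "dn6"],
    }
    print(place_replicas(cluster, "dn1"))
```

Losing either rack still leaves at least one replica available, while keeping two replicas in one rack limits the traffic that has to cross racks during a write.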

HDFS Read File Mechanism:

- To read a file from HDFS, the client first contacts the NameNode.

- The NameNode returns the addresses of the DataNodes on which the file's blocks are stored.

- The client then contacts the respective DataNodes directly to read the file.

- The NameNode also issues a token to the client, which the client presents to each DataNode for authentication.
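The read steps above can be simulated in memory as a toy model. The class names mirror the HDFS roles, but the methods, the shared secret, and the HMAC-based token are assumptions made for illustration and are not the Hadoop client API.

```python
import hashlib
import hmac

SECRET = b"shared-cluster-secret"  # hypothetical key known to NameNode and DataNodes


def make_token(block_id):
    """Derive a token for one block from the shared secret."""
    return hmac.new(SECRET, block_id.encode("utf-8"), hashlib.sha256).hexdigest()


class DataNode:
    def __init__(self):
        self.blocks = {}  # block id -> bytes

    def read_block(self, block_id, token):
        """Serve a block only if the client presents a valid token."""
        if not hmac.compare_digest(token, make_token(block_id)):
            raise PermissionError("invalid block token")
        return self.blocks[block_id]


class NameNode:
    def __init__(self):
        self.files = {}  # path -> ordered list of (block id, DataNode)

    def get_block_locations(self, path):
        """Return (block id, DataNode, token) for every block of the file."""
        return [(bid, node, make_token(bid)) for bid, node in self.files[path]]


def read_file(name_node, path):
    """Client side: ask the NameNode for locations, then read from DataNodes."""
    data = b""
    for block_id, node, token in name_node.get_block_locations(path):
        data += node.read_block(block_id, token)
    return data
```

Note that the file contents never pass through the NameNode in this model. It hands out only locations and tokens, which keeps the metadata server from becoming a data bottleneck.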

HDFS Write File Mechanism:

- To write a file into HDFS, the client first contacts the NameNode.

- The NameNode returns the address of the DataNode to which the client should start writing the data.

- As the client writes a block, that DataNode forwards the block to a second DataNode, which in turn forwards it to a third, forming a replication pipeline.

- Once the required number of replicas has been created, an acknowledgement is sent back to the client.
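The write steps above can be sketched as a chain of nodes that each store a block and pass it on. This is a simplified in-memory model with hypothetical names. It forwards whole blocks and ignores failures, whereas a real cluster has to handle nodes dropping out of the pipeline.

```python
class DataNode:
    def __init__(self, name):
        self.name = name
        self.blocks = {}  # block id -> bytes

    def write_block(self, block_id, data, downstream):
        """Store the block, forward it down the pipeline, and collect acks.

        downstream is the list of DataNodes that still need a replica.
        Returns the names of all nodes that stored the block, this one first.
        """
        self.blocks[block_id] = data
        acks = [self.name]
        if downstream:
            acks += downstream[0].write_block(block_id, data, downstream[1:])
        return acks


def write_with_pipeline(block_id, data, pipeline):
    """Client side: write to the first node and wait for every acknowledgement."""
    acks = pipeline[0].write_block(block_id, data, pipeline[1:])
    if len(acks) != len(pipeline):
        raise IOError("not every replica was acknowledged")
    return acks


if __name__ == "__main__":
    nodes = [DataNode("dn1"), DataNode("dn2"), DataNode("dn3")]
    print(write_with_pipeline("blk_1", b"hello", nodes))
```

The client sends the data only once. The DataNodes carry the cost of replication among themselves, so the client's upload bandwidth does not grow with the replication factor.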